External Secrets Operator with Doppler

Last time we configured our cluster step by step. The code is not public yet, but I will publish it someday, probably when it is smooth enough to share. Nevertheless, we have a working cluster. Today I will focus on connecting the External Secrets Operator with Doppler. So let's introduce today's stars.

External Secrets Operator

External Secrets Operator (ESO) is a Kubernetes operator that integrates external secret management systems like AWS Secrets Manager, HashiCorp Vault, Google Secrets Manager, Azure Key Vault, IBM Cloud Secrets Manager, CyberArk Conjur, Pulumi ESC and many more. The operator reads information from external APIs and automatically injects the values into a Kubernetes Secret.

Doppler

Doppler integrates with popular CI/CD tools and frameworks, making it easy to automate secrets management within your development workflow.

A quick tour of the platform

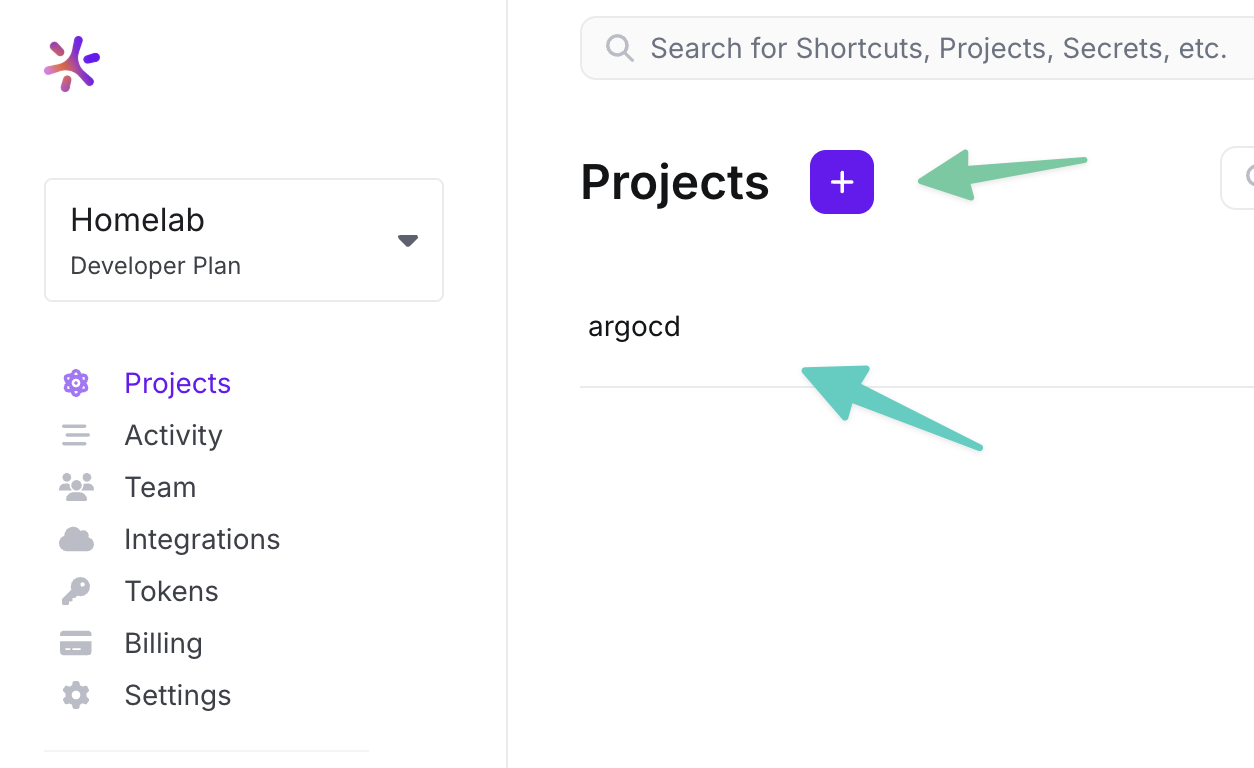

Create a project

Adding a new project is simple. Just click the `+` button next to the `Projects` label.

View of initial dashboard without projects

Manage stages

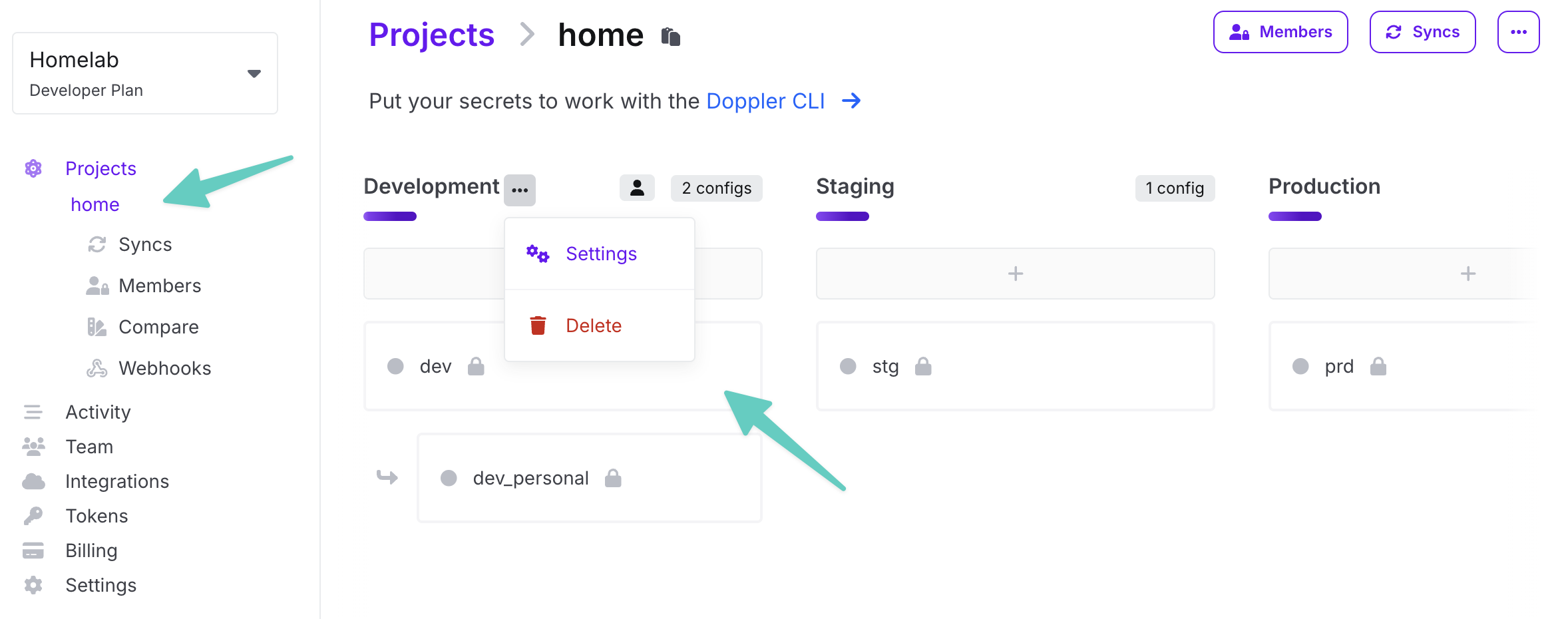

Here we can add, delete, or modify stages. At the beginning, I recommend using only one stage.

View of the default stages' dashboard

Adding secrets

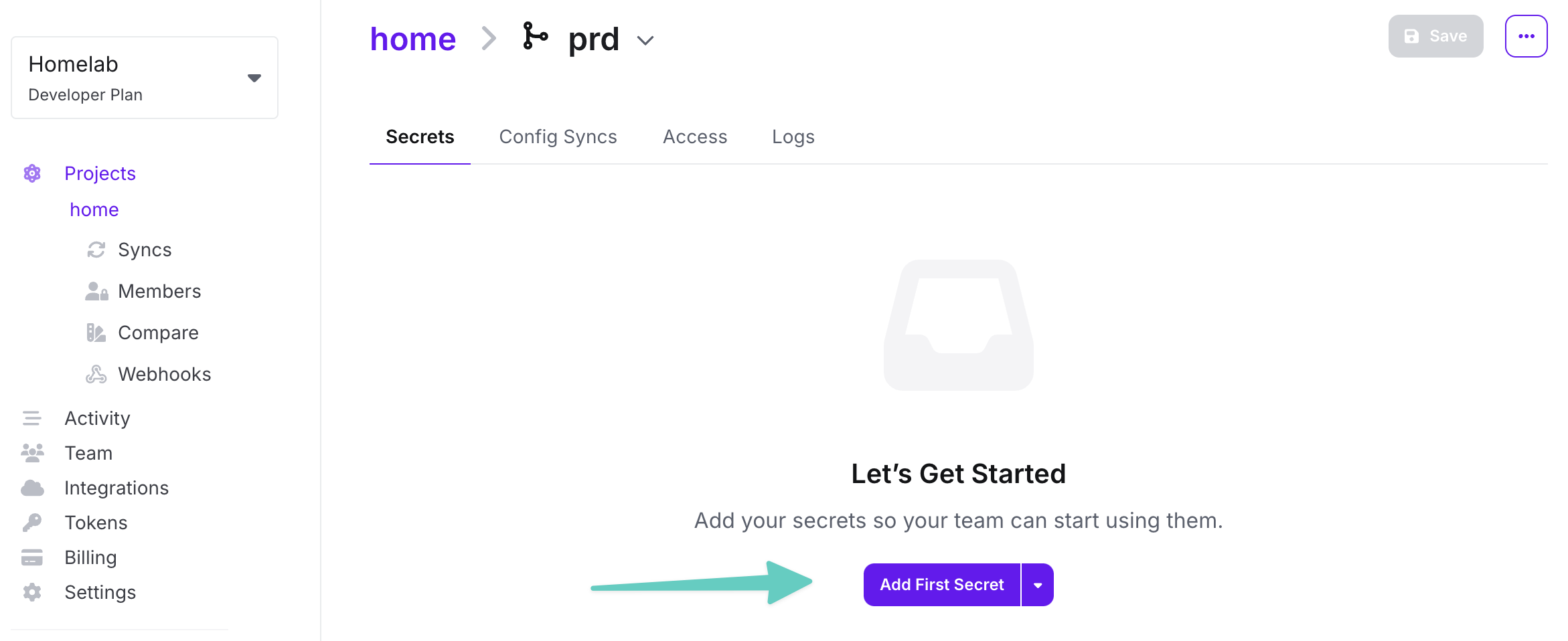

Then, after clicking on the stage name, we can add a new secret in two clicks.

Regular secret view

Getting a token

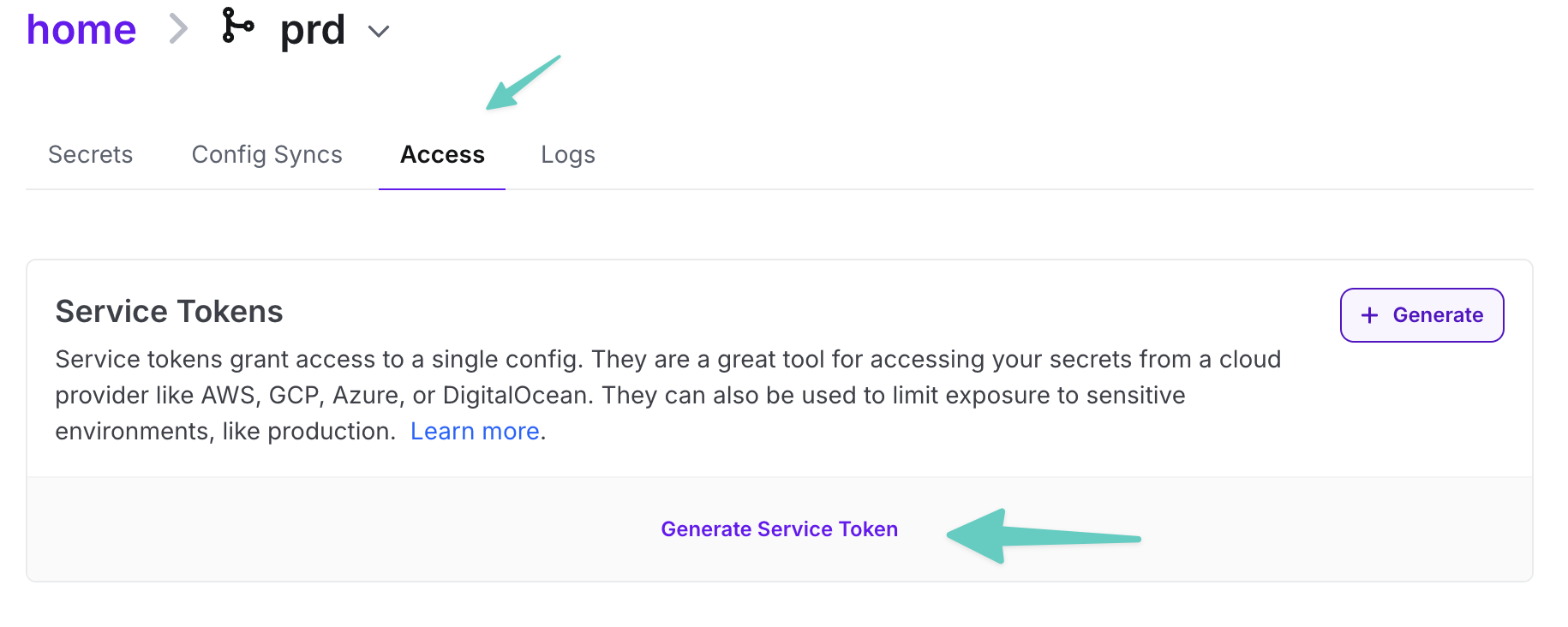

The last action is token generation. To achieve it, go to the Access tab and generate a token that has access to a particular config.

View of access tab

How to use this duet

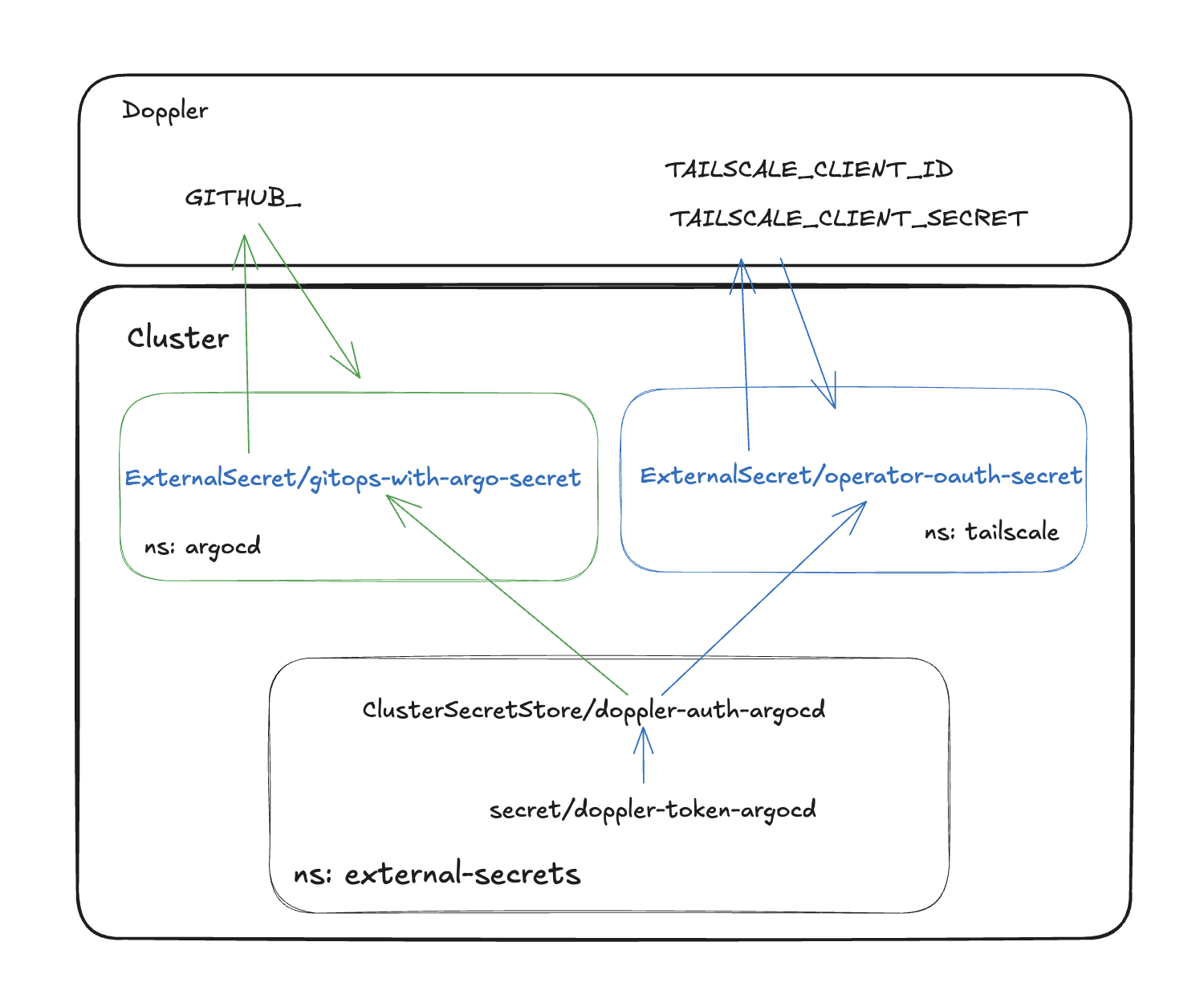

First, we need to understand how it works. In my cluster, it looks as shown in the picture below:

Flow diagram of ESO integration with Doppler and the cluster

As you can see, we need one "static secret" in our cluster. We can create it directly from the CLI or with sops. The effect is the same, and we need to start somewhere. I decided that my workstation terminal session is a good place to create the main secret.
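As a rough illustration of that static secret, here is a minimal sketch of a manifest holding the Doppler token. The Secret name `doppler-token-auth-api`, the `external-secrets` namespace, and the `dopplerToken` key are my assumptions, not names taken from the actual cluster. Adjust them to your setup.

```yaml
---
apiVersion: v1
kind: Secret
metadata:
  name: doppler-token-auth-api   # assumed name, pick your own
  namespace: external-secrets    # assumed namespace where ESO runs
type: Opaque
stringData:
  # Placeholder only: paste the token generated in the Access tab
  dopplerToken: "<your-doppler-service-token>"
```

A file like this should never land in Git unencrypted, which is exactly where sops or a one-off CLI command from a workstation comes in.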

When we have our token, we can call Doppler and retrieve the needed secrets. In my case, I'm using one Doppler project for everything and all namespaces have access to my token, but we can restrict it at the RBAC level if needed. Or not, that is why we're using ESO.
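The examples in this article reference a `ClusterSecretStore` called `doppler-auth-argocd`. A minimal sketch of such a store could look like the one below. It assumes the token lives in a Secret named `doppler-token-auth-api` in the `external-secrets` namespace under the `dopplerToken` key. All three are assumptions for illustration, so treat this as a starting point rather than my exact configuration.

```yaml
---
apiVersion: external-secrets.io/v1beta1
kind: ClusterSecretStore
metadata:
  name: doppler-auth-argocd
spec:
  provider:
    doppler:
      auth:
        secretRef:
          dopplerToken:
            name: doppler-token-auth-api   # assumed Secret name
            key: dopplerToken              # assumed key inside that Secret
            namespace: external-secrets    # a cluster-wide store must say where the Secret lives
```

Because the store is cluster-scoped, any `ExternalSecret` in any namespace can reference it, which matches the "all namespaces have access to my token" setup described above.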

Retrieving secrets

In my opinion, we have at least two modes of getting credentials that should meet all our needs.

Getting all prefixed secrets

```yaml
---
apiVersion: external-secrets.io/v1beta1
kind: ExternalSecret
metadata:
  name: hello
  namespace: hello
  labels:
    app.kubernetes.io/component: externalsecret
    app.kubernetes.io/part-of: hello
    app.kubernetes.io/managed-by: Kustomize
spec:
  refreshInterval: 6h
  secretStoreRef:
    name: doppler-auth-argocd
    kind: ClusterSecretStore
  dataFrom:
    - find:
        path: HELLO_
```

With this code, we're fetching all secrets with the prefix `HELLO_` into a single secret named `hello`. And that's it. If you want to check the result of your integration, just run:

```bash
kubectl get secrets hello \
  -o jsonpath="{.data.HELLO_SECRET}" \
  -n hello | base64 --decode
```

Getting particular secrets and putting them into a specific structure

```yaml
---
apiVersion: external-secrets.io/v1beta1
kind: ExternalSecret
metadata:
  name: hello
  namespace: hello
  labels:
    app.kubernetes.io/component: externalsecret
    app.kubernetes.io/part-of: hello
    app.kubernetes.io/managed-by: Kustomize
spec:
  refreshInterval: 6h
  secretStoreRef:
    name: doppler-auth-argocd
    kind: ClusterSecretStore
  target:
    name: hello-secret
    creationPolicy: Owner
    template:
      data:
        APP_SECRET: "{{ .HELLO_SECRET }}"
        INIT_PASSWORD: "{{ .HELLO_INIT_PASSWORD }}"
  dataFrom:
    - find:
        path: HELLO_
```

This will create `secret/hello-secret` with a specific object structure. When could it be helpful? I will show you 3 interesting examples that you could use in your setup.

Adding Cloudflare tunnel config file

Cloudflare tunnels require providing data in a specific format. It's a JSON string, looking similar to:

```json
{
  "AccountTag": "f3cc889ae810",
  "TunnelSecret": "SVD/O0sU+VtDlH023aBcToG0dMk=",
  "TunnelID": "8127899-c332-482c-91aa-d1210a479658"
}
```

And you need to use exactly this as a file-based secret. Then you have two options: put your prefixed secrets into one object and `credentials.json` into a second one, or use templates.

```yaml
---
apiVersion: external-secrets.io/v1beta1
kind: ExternalSecret
metadata:
  name: hello
  namespace: hello
  labels:
    app.kubernetes.io/component: externalsecret
    app.kubernetes.io/part-of: hello
    app.kubernetes.io/managed-by: Kustomize
spec:
  refreshInterval: 6h
  secretStoreRef:
    name: doppler-auth-argocd
    kind: ClusterSecretStore
  target:
    name: hello-secret
    creationPolicy: Owner
    template:
      data:
        credentials.json: "{{ .HELLO_TUNNEL }}"
        APP_SECRET: "{{ .HELLO_APP_SECRET }}"
        DB_SECRET: "{{ .HELLO_DB_SECRET }}"
        DATABASE_URL: "{{ .HELLO_DB_URL }}"
  dataFrom:
    - find:
        path: HELLO_
```

Building ArgoCD repository secret

If you have worked with ArgoCD in the past, there is a chance that you know this problem is not trivial. You need to build an object of type Secret containing the repository URL, user, and secret. As you can't have a nested secret (a secret reference inside another secret), you are forced to keep the whole file secret. Fortunately, you can use ESO templates.

```yaml
---
apiVersion: external-secrets.io/v1beta1
kind: ExternalSecret
metadata:
  name: gitops-with-argo-secret
  namespace: argocd
spec:
  refreshInterval: 6h
  secretStoreRef:
    name: doppler-auth-argocd
    kind: ClusterSecretStore
  target:
    name: gitops-with-argo
    creationPolicy: Owner
    template:
      type: Opaque
      metadata:
        labels:
          argocd.argoproj.io/secret-type: repository
      data:
        type: git
        url: https://github.com/3sky/argocd-for-home
        username: 3sky
        password: "{{ .password }}"
  data:
    - secretKey: password
      remoteRef:
        key: GITHUB_TOKEN
```

At the end, a simple, direct Tailscale mapping

```yaml
---
apiVersion: external-secrets.io/v1beta1
kind: ExternalSecret
metadata:
  name: operator-oauth-secret
  namespace: tailscale
spec:
  refreshInterval: 6h
  secretStoreRef:
    name: doppler-auth-argocd
    kind: ClusterSecretStore
  target:
    name: operator-oauth
    creationPolicy: Owner
    template:
      data:
        client_id: "{{ .client_id }}"
        client_secret: "{{ .client_secret }}"
  data:
    - secretKey: client_id
      remoteRef:
        key: TAILSCALE_CLIENT_ID
    - secretKey: client_secret
      remoteRef:
        key: TAILSCALE_CLIENT_SECRET
```
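Since the template in the Tailscale example only copies each key one-to-one, a shorter variant is possible. The sketch below is my assumption of an equivalent manifest, not something I run in the cluster: without a `template`, ESO writes each `secretKey` straight into the target Secret under that name.

```yaml
---
apiVersion: external-secrets.io/v1beta1
kind: ExternalSecret
metadata:
  name: operator-oauth-secret
  namespace: tailscale
spec:
  refreshInterval: 6h
  secretStoreRef:
    name: doppler-auth-argocd
    kind: ClusterSecretStore
  target:
    name: operator-oauth
    creationPolicy: Owner
  data:
    # Each secretKey becomes a key in secret/operator-oauth
    - secretKey: client_id
      remoteRef:
        key: TAILSCALE_CLIENT_ID
    - secretKey: client_secret
      remoteRef:
        key: TAILSCALE_CLIENT_SECRET
```

The templated form is still the better fit when keys need renaming, combining, or wrapping in a file, as in the Cloudflare and ArgoCD examples.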

Summary

Based on my testing and usage of this integration, so far so good. It is stable and not very resource-consuming, and we can control how often it syncs through `refreshInterval`. Doppler is free for small projects and individual users. Using ESO with popular enterprise solutions like HashiCorp Vault should be very similar. Maybe I will check it someday.

I hope you like this kind of article: smaller and focused on a particular problem or integration. If not, let me know.