Production-Ready n8n on AWS

This is another microblog-style post about self-hosting on AWS. This time, let's talk about n8n - the workflow automation tool that's been gaining traction in the no-code/low-code world. After successfully deploying GoToSocial on Lightsail (which you can read about here), I decided to tackle something more useful: a production-ready n8n setup that doesn't break the bank.

Why n8n?

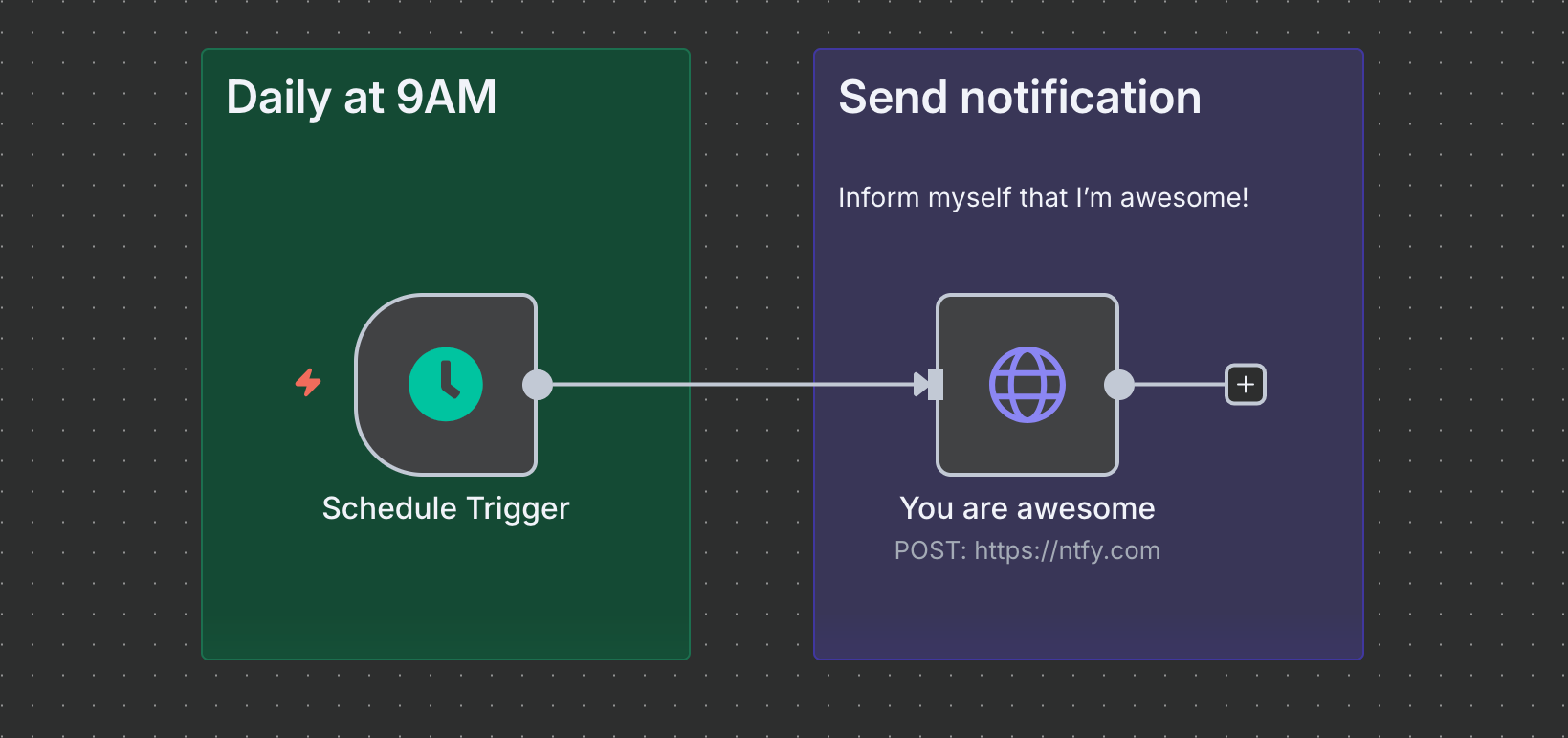

n8n is an open-source workflow automation platform that lets you connect different services and APIs. Think Zapier, but self-hosted and with more control. Perfect for automating repetitive tasks, data synchronization, or building complex integrations between your tools.

The problem? Most tutorials show you the "docker run" approach, which is great for testing but terrible for production. Today, we're doing this properly - with security and maintenance in mind.

The Challenge: IPv6 Limitations

Unlike my previous GoToSocial setup, n8n presented a unique challenge. While I'd love to use an IPv6-only deployment for cost savings, n8n's webhook functionality and third-party integrations often struggle with dual-stack configurations. Many APIs and services still don't play nicely with IPv6, causing connection issues that are hard to debug. Early on, when I noticed that yet another service had issues with dual-stack, I decided that the time spent solving those problems was worth more than $4 per month.

So, we're sticking with IPv4 for now - but keeping the setup lean and cost-effective.

Infrastructure Setup

For the AWS infrastructure part - again Lightsail - I'm using the same Terraform approach from my previous GoToSocial post. Check out that post for the complete Terraform setup. The infrastructure code is also available in my blog-bin repository.

Why Lightsail? It's easy - costs! The community edition of n8n is a rather monolithic app (I'm not sure about Enterprise), so autoscaling and similar techniques are hard to apply. First we need to monitor the app to understand our needs. For me, a cheap instance with 1 GB of RAM was not enough; 4 GB is much smoother at my scale (around 10 active workflows).
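To judge whether an instance size fits, a quick look at per-container memory can help. The snippet below is only a sketch and not part of the original setup: the function name `mem_over` and the 75% limit are my own assumptions. It filters `docker stats` output and prints the containers above a given percentage.

```shell
# Print containers whose memory usage exceeds a percentage limit.
# Expects "name percent%" lines on stdin, e.g. from:
#   docker stats --no-stream --format '{{.Name}} {{.MemPerc}}'
mem_over() {
  awk -v limit="$1" '{
    pct = $2
    sub(/%$/, "", pct)                     # strip the trailing percent sign
    if (pct + 0 > limit + 0) print $1, $2  # numeric comparison
  }'
}
```

Usage on the host would look like `docker stats --no-stream --format '{{.Name}} {{.MemPerc}}' | mem_over 75`. Without a container memory limit, the percentage is relative to the host's RAM, which is what matters when picking the Lightsail plan.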

Then, why not use RDS? Again costs, as well as my 'as code' approach. I don't really care about the execution log as long as everything is OK. Workflows are stored as code and backed up; credentials are exported as well and stored in an external vault.
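For the export itself, the n8n CLI ships `export:workflow` and `export:credentials` commands. Here is a minimal sketch of how that could be wrapped; the function name, the `DOCKER` override, and the destination path are my assumptions, and the container name `n8n` is simply the default from the compose template in this post.

```shell
# Export all workflows and credentials from a running n8n container.
# Set DOCKER=echo for a dry run that only prints the commands.
backup_n8n() {
  container="$1"   # container name, e.g. "n8n"
  dest="$2"        # path inside the container (assumed to sit on a mounted volume)
  "${DOCKER:-docker}" exec "$container" \
    n8n export:workflow --backup --output="$dest/workflows/" &&
  "${DOCKER:-docker}" exec "$container" \
    n8n export:credentials --backup --output="$dest/credentials/"
}
```

A call such as `backup_n8n n8n /home/node/.n8n/backup` writes one JSON file per workflow and per credential. Without `--decrypted`, credentials stay encrypted with the instance's `N8N_ENCRYPTION_KEY`, so that key has to be kept safe alongside the export.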

Finally, Cloudflare. I use it quite heavily, and it comes with a lot of benefits: endpoint monitoring, certificate management, and tunnels. That is why I feel much safer with it :)

Ah, and for SSH it's the same story as with GoToSocial: Tailscale for direct access. No direct inbound connections to my VM.

Docker Compose: ~~The Right Way~~ My Way

Once your Lightsail instance is running, we need to install n8n properly. Here's my Docker Compose setup that handles everything, meaning n8n and the Cloudflare tunnel. And yes, it's overcomplicated, but I wanted to be able to tweak a lot of properties without touching the docker-compose structure. It has healthchecks implemented as well.

```yaml
# templates/docker-compose.n8n.yml.j2

name: {{ n8n_project_name | default('n8n') }}

services:
  n8n:
    image: n8nio/n8n:{{ n8n_version | default('1.51.1') }}
    container_name: {{ n8n_container_name | default('n8n') }}
    restart: unless-stopped
    user: "{{ n8n_user_id | default('1000') }}:{{ n8n_group_id | default('1000') }}"
    ports:
      - "{{ n8n_bind_address | default('127.0.0.1') }}:{{ n8n_port | default('5678') }}:5678"
    volumes:
      - n8n_data:/home/node/.n8n
    environment:
      - N8N_HOST={{ n8n_host | default('n8n.example.com') }}
      - N8N_PORT=5678
      - N8N_PROTOCOL={{ n8n_protocol | default('https') }}
      - NODE_ENV={{ node_env | default('production') }}
      - WEBHOOK_URL={{ webhook_url | default('https://n8n.example.com') }}
      - N8N_USE_DEPRECATED_REQUEST_LIB={{ n8n_use_deprecated_lib | default('true') }}
      - N8N_LOG_LEVEL={{ n8n_log_level | default('info') }}
      - N8N_METRICS={{ n8n_metrics | default('false') }}
      - N8N_SECURE_COOKIE={{ n8n_secure_cookie | default('true') }}
      - N8N_ENCRYPTION_KEY=${N8N_ENCRYPTION_KEY}
      - N8N_RUNNERS_ENABLED={{ n8n_runners_enabled | default('true') }}
      - N8N_ENFORCE_SETTINGS_FILE_PERMISSIONS={{ n8n_enforce_settings_permissions | default('true') }}
{% if n8n_additional_env_vars is defined %}
{% for env_var in n8n_additional_env_vars %}
      - {{ env_var }}
{% endfor %}
{% endif %}
    healthcheck:
      test: ["CMD-SHELL", "wget --no-verbose --tries=1 --spider http://localhost:5678/healthz || exit 1"]
      interval: {{ n8n_health_interval | default('30s') }}
      timeout: {{ n8n_health_timeout | default('10s') }}
      retries: {{ n8n_health_retries | default('3') }}
      start_period: {{ n8n_health_start_period | default('40s') }}
    depends_on:
      - tunnel
    networks:
      - {{ network_name | default('n8n-network') }}
    logging:
      driver: "json-file"
      options:
        max-size: "{{ n8n_log_max_size | default('10m') }}"
        max-file: "{{ n8n_log_max_files | default('3') }}"

  tunnel:
    image: cloudflare/cloudflared:{{ cloudflared_version | default('2024.8.2') }}
    container_name: {{ tunnel_container_name | default('cloudflared-tunnel') }}
    restart: unless-stopped
    user: "{{ tunnel_user_id | default('65532') }}:{{ tunnel_group_id | default('65532') }}"
    volumes:
      - tunnel_config:/etc/cloudflared:ro
    command: {{ tunnel_command | default('tunnel --no-autoupdate run') }}
    environment:
      - TUNNEL_TOKEN=${TUNNEL_TOKEN}
{% if tunnel_additional_env_vars is defined %}
{% for env_var in tunnel_additional_env_vars %}
      - {{ env_var }}
{% endfor %}
{% endif %}
    healthcheck:
      test: ["CMD-SHELL", "cloudflared tunnel info || exit 1"]
      interval: {{ tunnel_health_interval | default('60s') }}
      timeout: {{ tunnel_health_timeout | default('10s') }}
      retries: {{ tunnel_health_retries | default('3') }}
      start_period: {{ tunnel_health_start_period | default('30s') }}
    networks:
      - {{ network_name | default('n8n-network') }}
    logging:
      driver: "json-file"
      options:
        max-size: "{{ tunnel_log_max_size | default('5m') }}"
        max-file: "{{ tunnel_log_max_files | default('3') }}"

networks:
  {{ network_name | default('n8n-network') }}:
    driver: bridge
    name: {{ network_name | default('n8n-network') }}

volumes:
  n8n_data:
    name: {{ n8n_volume_name | default('n8n_data') }}
  tunnel_config:
    name: {{ tunnel_volume_name | default('n8n_tunnel_config') }}
```
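The container healthcheck polls n8n's `/healthz` endpoint from the inside. The same endpoint can be polled from the host after a deploy; the helper below is a sketch of that idea, with a hypothetical function name and retry values, and it assumes `curl` is installed on the host.

```shell
# Retry a command until it succeeds or the attempts run out.
# usage: wait_until ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
wait_until() {
  attempts="$1"; delay="$2"; shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    "$@" >/dev/null 2>&1 && return 0          # success: stop retrying
    [ "$i" -lt "$attempts" ] && sleep "$delay" # no sleep after the last try
    i=$((i + 1))
  done
  return 1
}
```

With the default bind address and port from the template, `wait_until 10 5 curl -fsS http://127.0.0.1:5678/healthz` gives n8n up to roughly 45 seconds to come up before reporting a failure.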

Environment file (templates/n8n.env.j2):

```ini
N8N_ENCRYPTION_KEY={{ n8n_encryption_key }}
TUNNEL_TOKEN={{ tunnel_token }}
```
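The value behind `n8n_encryption_key` has to be generated once and then kept stable, because n8n uses it to encrypt stored credentials. One way to produce it, as a sketch (the function name is hypothetical, and I'm assuming any sufficiently random string is acceptable as a key):

```shell
# Generate a random 32-byte key, base64-encoded (44 characters).
gen_encryption_key() {
  head -c 32 /dev/urandom | base64
}
```

Run it once, paste the result into the vault, and never regenerate it for an existing instance: credentials encrypted with the old key would no longer be readable.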

Vars file (group_vars/aws/all.yaml):

```yaml
# Host
ssh_port: 22
docker_compose_version: "2.24.5"

# Containers
n8n_version: 1.102.3
tunnel_command: 'tunnel run'
cloudflared_version: latest
```

I know, I'm using the latest tag for cloudflared, but to be honest, they ship a new version every month and it has always been stable enough. So I'm fine with it.

Then my vault file group_vars/aws/vault.yaml

```yaml
## N8N
vault_n8n_encryption_key: "NEcIZR+seLCfawL3+SNYtD8SlBNLGBR5J21z6aT/U5Y="
n8n_host: 'n8n.example.com'
webhook_url: 'https://n8n.example.com'
vault_tunnel_token: "OWMf9aBbNFh4qxxPcKcOCdeb5I6o9PHbwoSQpc0AWiwLFtilaNWzMOzybyTa2B3qCZX4pG7dlidSUm9sOdT7+d8ALYj+DG0MfB2YPBqX4q/WfQ1bgIHnxi+hYNzA0aDMA9ssuA=="


## general info
main_user: jwolynko
main_password: 'jC2hCOQvqoN6CStY7mAY6pdnnPWHVZDfFJxkLXrI7+4='
user_public_keys:
  - "ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIJxYZEBNRLXmuign6ZgNbmaSK7cnQAgFpx8cCscoqVed local"
  - "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAACAQCfLtBYtg6ulr1qF0crYDhEcHkWvxTRECel7Z1it2bV81+pNpXuEKMKHrL4hn4RBLFe0+kIZr9xQN9NG0B+eErtY9Cc2+dSouszDsvC7vMWYkynh6c8oQutcVCvGPKYSh5SgJLrNOOrAXRyHP7Gtj1Nnoih/Ci32JSEt9s8V6ojaSc/xUbFbhvCFuVDHJ7hWf59gk91KjaeuS7cQcdX6/QpwsITFkd3j77rPU31rW/YDJycwQzgMRw7gPzgo/qKVHde7QY1fvrl1JG1rdogLIZeGCAd3ljgrk8pIVQoTAFxBWnSyQHEVSUiLniXfDOIYbss8SFNxe3loFZ44jWjG/KbIlATKXM2iOA3SsSJBI4AjrjoQAy6til3t0C+TE18q4Ip2mSa1YXPYPZ4tFyHohP1DLEDeq4KQeecoZHjkzUTb0aDaEWwAtV2PBnxu7lwQNqBMgiTLN52s9zPl11kfVAkbDzugVJKIiqm/m2IkamlCRlmjf6Gs/WA/wwx0XMab6zmvKiMnjeLJzqcKA0O2pC8yN3f4kL1OJ/5W80bX9HhzR5JslpD3DQMK7f8XaMynBYdTiixD2SJndJvbdcGulxMaednCj03+EWw7fTauc38HfADp19L+lFBf3eIA3KWojXR+f4veLY3Ktnzzm0HI2/hKFu6pQntl4VdshdeuNa4RQ== backup"
```
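The vault file is shown decrypted here for readability; on disk it should only ever exist in encrypted form. Files produced by `ansible-vault` start with a `$ANSIBLE_VAULT;` header line, so a small guard can catch an accidental plaintext commit. This is a sketch of such a check, with a hypothetical function name:

```shell
# Succeed only if the file starts with the ansible-vault header line.
is_vault_encrypted() {
  head -n 1 "$1" | grep -q '^\$ANSIBLE_VAULT;'
}
```

It fits naturally into a pre-commit hook, for example `is_vault_encrypted group_vars/aws/vault.yaml || { echo "vault is not encrypted" >&2; exit 1; }`.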

Then I have my quite simple playbook:

```yaml
---
- name: Deploy N8N with Docker Compose
  hosts: aws
  become: yes
  vars:
    n8n_directory: "/opt/n8n"
    n8n_encryption_key: "{{ vault_n8n_encryption_key | default('your-32-char-encryption-key-here') }}"
    tunnel_token: "{{ vault_tunnel_token }}"

  tasks:
    - name: Create n8n directory
      ansible.builtin.file:
        path: "{{ n8n_directory }}"
        state: directory
        mode: '0755'
        owner: root
        group: root

    - name: Create .env file for Docker Compose
      ansible.builtin.template:
        src: n8n.env.j2
        dest: "{{ n8n_directory }}/.env"
        mode: '0600'
        owner: root
        group: root
      notify: restart n8n

    - name: Create /etc/docker directory
      file:
        path: /etc/docker
        state: directory
        mode: '0755'


    - name: Install required packages
      ansible.builtin.package:
        name:
          - python3-pip
        state: present
        update_cache: yes

    - name: Deploy docker-compose.yml from template
      ansible.builtin.template:
        src: docker-compose.n8n.yml.j2
        dest: "{{ n8n_directory }}/docker-compose.yml"
        mode: '0644'
        owner: root
        group: root
      notify: restart n8n

    - name: Configure ping group range for cloudflared
      ansible.posix.sysctl:
        name: net.ipv4.ping_group_range
        value: "65532 65534"
        state: present
        sysctl_file: /etc/sysctl.d/99-cloudflared.conf
        reload: true

    - name: Start Docker service
      ansible.builtin.systemd:
        name: docker
        state: started
        enabled: yes

    - name: Deploy N8N with Docker Compose
      community.docker.docker_compose_v2:
        project_src: "{{ n8n_directory }}"
        state: present
        pull: missing
        remove_orphans: yes
        env_files: "{{ n8n_directory }}/.env"
      register: compose_result

    - name: Show deployment result
      ansible.builtin.debug:
        var: compose_result

  handlers:
    - name: restart n8n
      community.docker.docker_compose_v2:
        project_src: "{{ n8n_directory }}"
        state: present
        env_files: "{{ n8n_directory }}/.env"
```

Inventory file (inventory.yaml):

```yaml
aws:
  hosts:
    n8n:
      ansible_python_interpreter: python3
```

The host name n8n is resolved through a regular entry in ~/.ssh/config.

Deployment

Running the deployment is straightforward:

```shell
# Clone your setup
git clone https://codeberg.org/3sky/micro-n8n.git
cd micro-n8n

# Make a vault and populate it with your data
ansible-vault create group_vars/aws/vault.yaml

# Execute the playbook as root, to prep the system
ansible-playbook -i inventory.yaml baseline.yaml --ask-vault-pass

# Execute the playbook as a user, to install n8n
ansible-playbook -i inventory.yaml deploy-n8n.yaml --ask-vault-pass -K
```
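Before the first run it can save a failed attempt to confirm that the tools used above are actually installed on the workstation. A small preflight helper could look like this; the function name is hypothetical and the list of commands is just what this post uses.

```shell
# Report any missing commands; return non-zero if at least one is absent.
require_cmds() {
  missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For this setup: `require_cmds git ansible-playbook ansible-vault ansible-galaxy`. The playbook also relies on the `community.docker` and `ansible.posix` collections, which `ansible-galaxy collection install` can provide if they are not present.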

What to do when a new version comes out? Just update group_vars/aws/all.yaml and trigger:

```shell
ansible-playbook -i inventory.yaml deploy-n8n.yaml --ask-vault-pass -K
```
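The version bump itself is a one-line change, so it is easy to script. A sketch, assuming the vars file keeps the `n8n_version:` key at the start of a line as shown earlier (the function name is hypothetical):

```shell
# Replace the n8n_version value in an Ansible vars file.
# Leaves a copy of the previous file next to it as FILE.bak.
bump_n8n_version() {
  file="$1"; version="$2"
  sed -i.bak -E "s/^(n8n_version:[[:space:]]*).*/\1${version}/" "$file"
}
```

For example, `bump_n8n_version group_vars/aws/all.yaml 1.103.0` (a made-up version number) followed by the playbook run above. Other keys in the file, such as `cloudflared_version`, are left untouched.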

Security Considerations

This setup includes several security layers:

- Lightsail firewall: No inbound connections allowed after the initial configuration

- Docker isolation: Services run in isolated containers

- Secrets management: Environment variables, not hardcoded values

- Ansible Vault: Secrets are stored encrypted at rest
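One way to double-check the first two layers from the host itself is to list what is listening on non-loopback addresses. The filter below is a sketch with a hypothetical function name; it reads `ss` output and prints every local address that is not bound to loopback.

```shell
# Print listening sockets that are not bound to a loopback address.
# Expects `ss -H -tln` output on stdin (local address is column 4).
public_listeners() {
  awk '$4 !~ /^(127\.|\[::1\])/ { print $4 }'
}
```

Run it as `ss -H -tln | public_listeners`. With the default bind address of `127.0.0.1`, the n8n port should not appear in the output; anything that does appear (sshd, for instance) is protected only by the Lightsail firewall.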

Overall Considerations

This setup may be different from my other solutions:

- Ubuntu: I'm not using CentOS here because I want to use Docker in a simpler way. Why? My idea was to deliver it to a customer and let them manage it. And Ubuntu with Docker is simpler than CentOS with Podman quadlets.

- Lightsail: Again costs, and simplicity. It could be anything else based on customer needs. It must be easy to migrate, replicate, and rebuild.

- Simple playbooks: The assumption was to deliver business value fast and in a stable way. The only important thing is the n8n version, as we want to keep it fresh all the time - endpoints are exposed to the public internet.

- VPN: For a production setup, using a different VPN solution is entirely possible, which is why it's not covered here.

- Cloudflare: OK, we could use Nginx here, but again there are network constraints, etc. And exposing an application directly to the internet is always something to think twice about.

Cost Breakdown

Here's the real monthly cost:

- Lightsail with 4GB of RAM and IPv4: $24/month

- Total: ~$24/month

That's almost as cheap as Zapier Pro at $20/month or hosted n8n, and you get unlimited workflows! You can also start with a smaller instance and watch your usage.

What's Next?

This post covered the foundation - getting n8n running securely on AWS with proper automation. In the next part, we'll dive into:

- Monitoring and Alerting: CloudWatch integration, custom metrics, and alerting strategies

- Backup and Recovery: Automated database backups and disaster recovery procedures

- Advanced Security: WAF integration, VPC endpoints, and compliance considerations

Summary

We've built a production-ready n8n deployment using modern DevOps practices. The key takeaways:

- Infrastructure as Code: Terraform for AWS resources

- Configuration Management: Ansible for reproducible deployments

- Container Orchestration: Docker Compose for service management

- Security First: SSH, firewalls, and proper secret management

- Cost Optimization: Under $40/month for a production-ready setup with AI tokens

The complete code is available in my micro-n8n repository. This setup is battle-tested - I'm using it for client automation projects and it's been rock-solid.

Self-hosting n8n gives you complete control over your workflows, data, and integrations. Plus, at under $25/month, it's significantly cheaper than most SaaS alternatives once you start scaling.

Host your own automation, it's simpler than you think.

Quick Note on Snippet Management

Finally, I wanted to mention that I've found my gold standard for snippet management: Nextcloud Notes with Neovim. The combination of cloud sync, instant sharing, markdown support, and vim keybindings has transformed my consultant workflow. I'll be writing a dedicated post about this setup soon - it's a game-changer for anyone juggling multiple projects and code snippets.